Past, Present, Future uses immersive media to restore access and living context now, while physical restitution efforts continue. The pipeline below outlines how assets move from capture to exhibition.

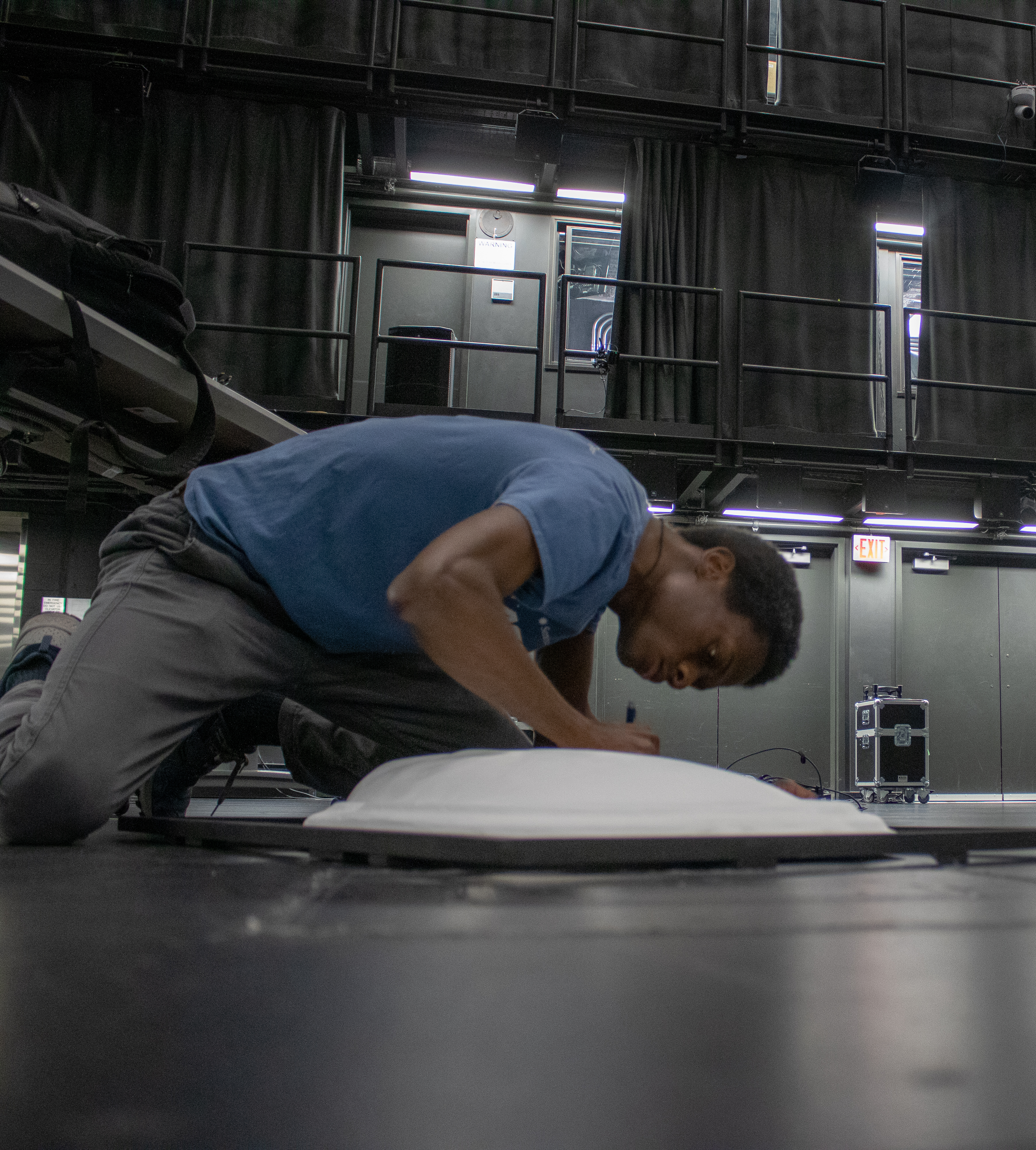

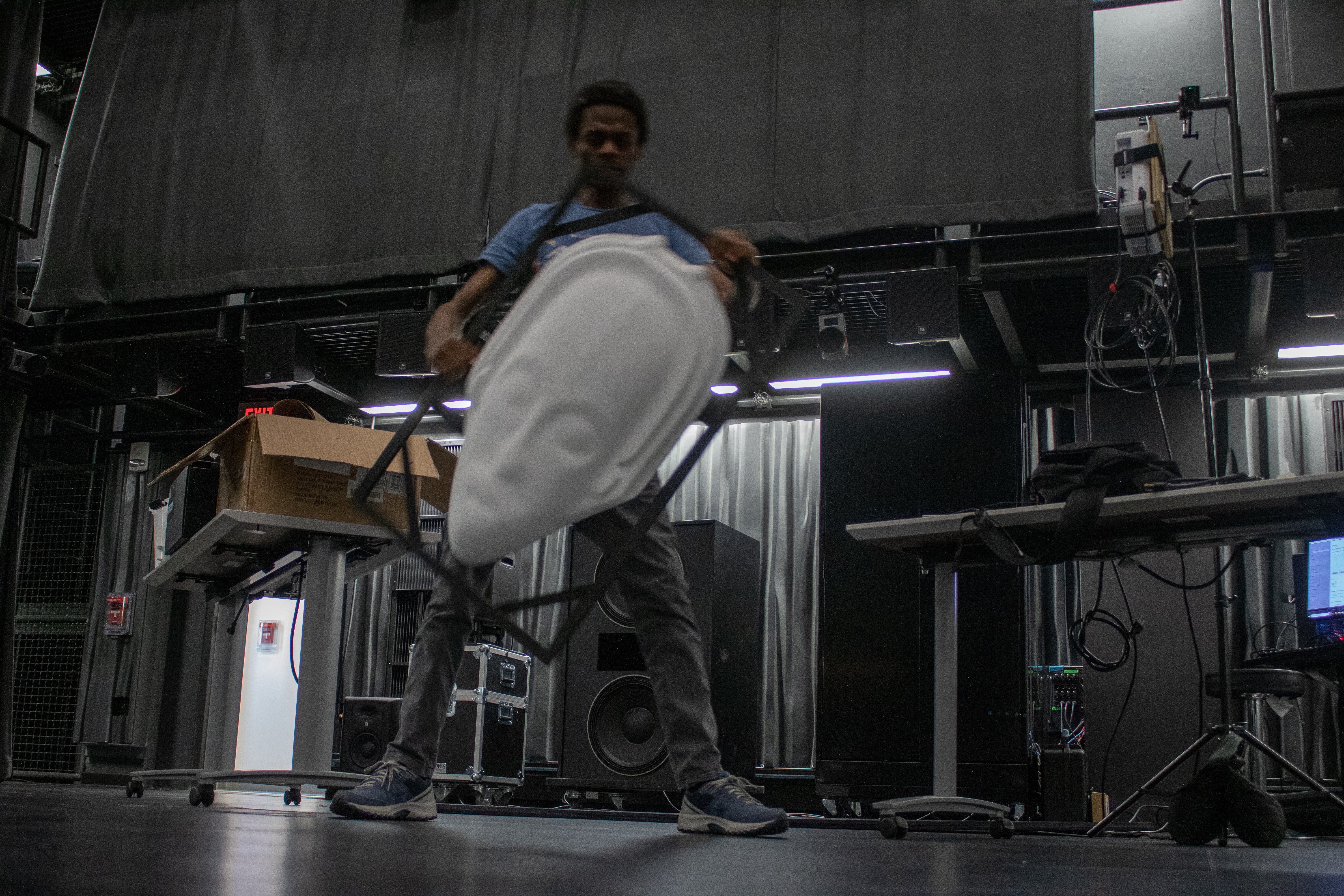

Scanning with Photogrammetry

Following the selection of pieces from the Miller collection, I photographed each artifact from multiple angles (150–500 images per object). The images were then processed into detailed 3D meshes and textures, aiming for high-fidelity, “water-tight” digital replicas suitable for immersive environments.
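To make the image counts concrete, here is a minimal sketch of how a capture pass might be planned. It is not necessarily how these shoots were organized: the ring layout, the 75-degree elevation ceiling, and the `capture_plan` function are assumptions for illustration only.

```python
import math

def capture_plan(rings, shots_per_ring, radius_m=1.0, max_elevation_deg=75.0):
    """Return camera poses (azimuth_deg, elevation_deg, x, y, z) around an object at the origin.

    Hypothetical helper: cameras sit on horizontal rings at evenly spaced elevations.
    """
    poses = []
    for r in range(rings):
        # Spread rings evenly from 0 degrees up to the assumed maximum elevation.
        elevation = max_elevation_deg * r / max(rings - 1, 1)
        for s in range(shots_per_ring):
            azimuth = 360.0 * s / shots_per_ring
            az, el = math.radians(azimuth), math.radians(elevation)
            x = radius_m * math.cos(el) * math.cos(az)
            y = radius_m * math.cos(el) * math.sin(az)
            z = radius_m * math.sin(el)
            poses.append((azimuth, elevation, x, y, z))
    return poses

# 5 rings with a shot every 10 degrees gives 180 images,
# which falls inside the 150–500 range quoted above.
plan = capture_plan(rings=5, shots_per_ring=36)
print(len(plan))
```

Smaller angular steps or extra rings raise the count toward the upper end of the range, which is typically where objects with deep relief or fine surface detail end up.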

3D Asset Pipeline (ZBrush, Substance, Stager)

After scanning, the raw 3D models were cleaned, retopologized, re-textured, and optimized. High-poly meshes were refined and reduced both during processing and in ZBrush; surface details and materials were rebuilt in Substance Painter; and final assets were prepared and rendered in either Autodesk Maya or Adobe Stager.

Virtual Reality Worldbuilding (Unreal Engine)

The immersive environment was built as a virtual reality world using Unreal Engine. I designed the landscape, lighting, and interactions to place recontextualized artifacts inside a living, symbolic environment where time, atmosphere, and narrative all work together.

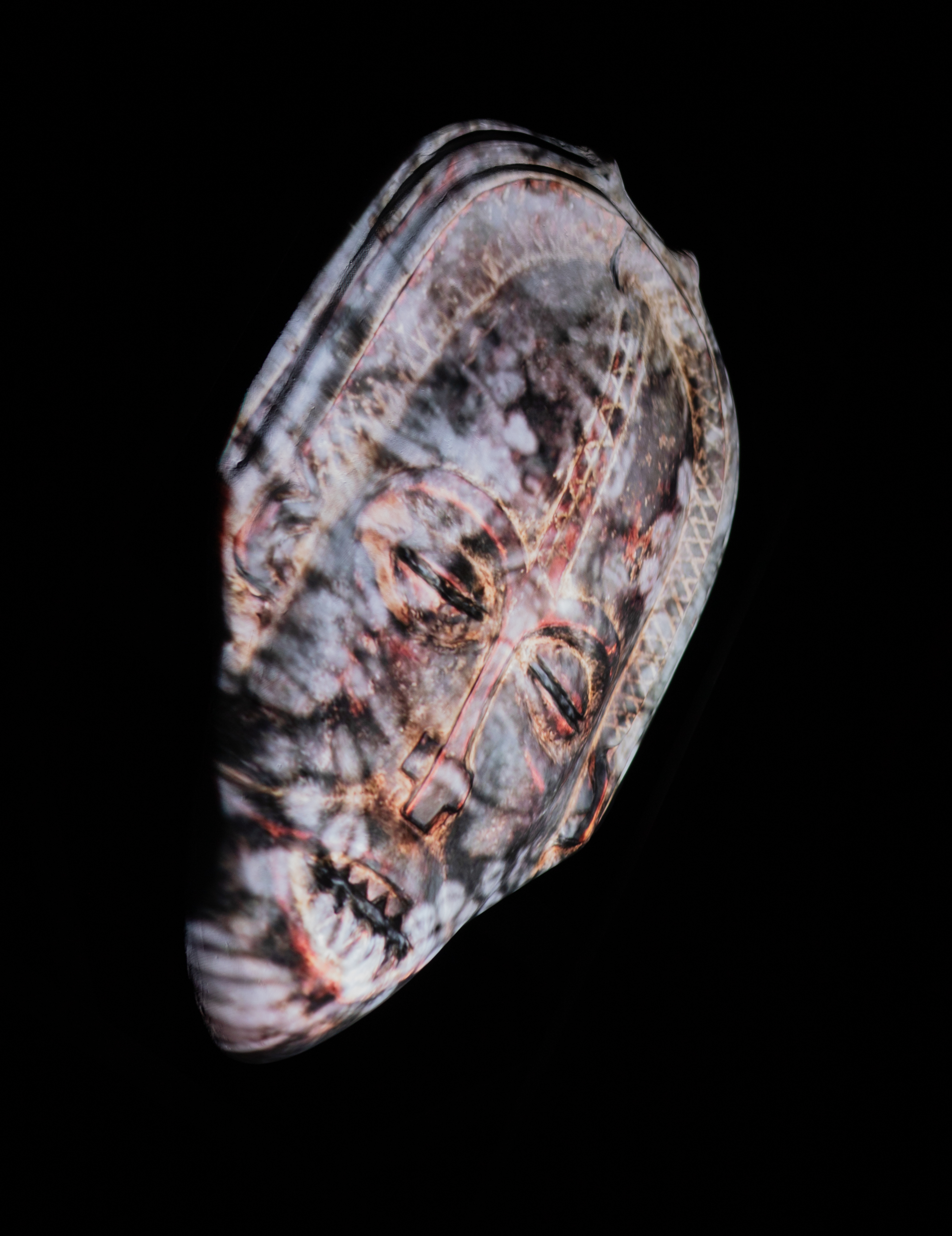

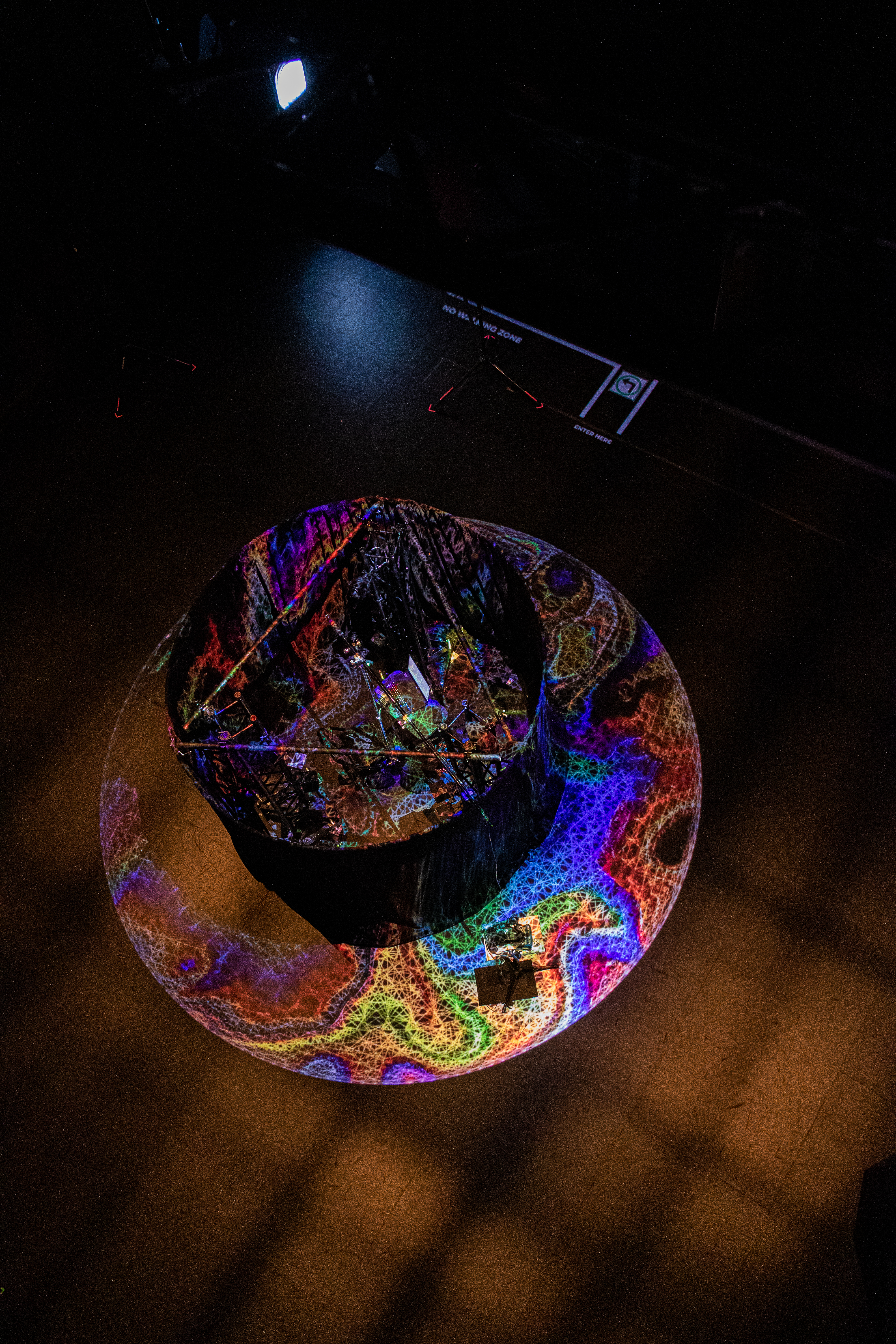

Projection Mapping

The installation extends beyond headsets through projection mapping. A digitally processed and modified mask form serves as a projection surface, allowing the artifact to appear at monumental scale. Mapping the mask's texture and overlaying moving imagery onto a mask-shaped object (instead of a flat screen) preserves its presence while opening up new interactive storytelling possibilities.

Spatial & Directional Sound Design

The project uses spatial audio and directional sound to deepen immersion. Environmental sounds (water, insects, birds) and the voices of objects are placed in space so they can only be heard from specific locations. Sound becomes another way the artifacts “speak” to visitors. Through the use of a holosonic speaker, the mask's monologue is isolated from the rest of the exhibit.
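The idea of a sound that is audible only from a specific location can be sketched as a distance-based gain. This is an illustrative model rather than the project's actual audio setup, which would normally be configured through the engine's own attenuation settings; `zone_gain` and its radii are hypothetical names and values.

```python
import math

def zone_gain(listener, source, inner_radius, outer_radius):
    """Return a gain in [0, 1] for a listener position relative to a sound source.

    Full volume inside inner_radius, silent beyond outer_radius,
    and a linear fade in between. Positions are coordinate tuples in metres.
    """
    distance = math.dist(listener, source)
    if distance <= inner_radius:
        return 1.0
    if distance >= outer_radius:
        return 0.0
    return (outer_radius - distance) / (outer_radius - inner_radius)

# A voice placed at the origin, fully audible within 1 m and silent past 3 m.
for position in [(0.5, 0.0), (2.0, 0.0), (5.0, 0.0)]:
    print(position, zone_gain(position, (0.0, 0.0), 1.0, 3.0))
```

Keeping the outer radius small is what makes a voice feel attached to one object: a visitor has to walk up to it before it becomes audible.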

Web-Based AR Prototyping (HTML5, JS, AR.js)

Earlier stages of the project experimented with web-based augmented reality using HTML5, CSS3, JavaScript, and AR.js. This prototype explored how Congolese users could access digitized artifacts on their phones via a single link and marker system, pointing toward more accessible, browser-based futures for digital repatriation.

XR Framework: AR, VR, and Beyond

The thesis works within a broader XR (extended reality) framework that includes VR, AR, and mixed reality. While the final installation focuses on VR and projection mapping, the methodology intentionally keeps the assets and workflows flexible so they can be adapted to future XR platforms and access points.

Digital Repatriation Workflow

Rather than inventing new hardware, the project’s core method is a sequenced workflow: from physical object → high-resolution photography → photogrammetry → 3D cleanup and texturing → XR-ready assets → VR world + projection mapping + spatial sound. This chain models a practical, repeatable approach to digital repatriation that other artists, museums, and communities can adapt.
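For anyone adapting the workflow, the sequence above could be tracked per object with something as simple as the sketch below. The stage names come from the chain described here; the `Asset` class, its methods, and the example object name are hypothetical and only illustrate enforcing the order of steps.

```python
from dataclasses import dataclass, field
from typing import List

# Processing stages, in the order given in the workflow above.
STAGES = [
    "high-resolution photography",
    "photogrammetry",
    "3D cleanup and texturing",
    "XR-ready assets",
]

# Exhibition outputs that an XR-ready asset can feed into.
OUTPUTS = ["VR world", "projection mapping", "spatial sound"]

@dataclass
class Asset:
    """Tracks how far one physical object has moved through the workflow."""
    name: str
    completed: List[str] = field(default_factory=list)

    def advance(self) -> str:
        """Mark the next stage as done; stages cannot be skipped or reordered."""
        if self.is_xr_ready():
            raise ValueError(f"{self.name} has already completed every stage")
        stage = STAGES[len(self.completed)]
        self.completed.append(stage)
        return stage

    def is_xr_ready(self) -> bool:
        return len(self.completed) == len(STAGES)

mask = Asset("mask")  # hypothetical example object
while not mask.is_xr_ready():
    print("completed:", mask.advance())
print("available outputs:", ", ".join(OUTPUTS))
```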